Deploy xinference with docker and expose an OpenAI-compatible API for external software to use.

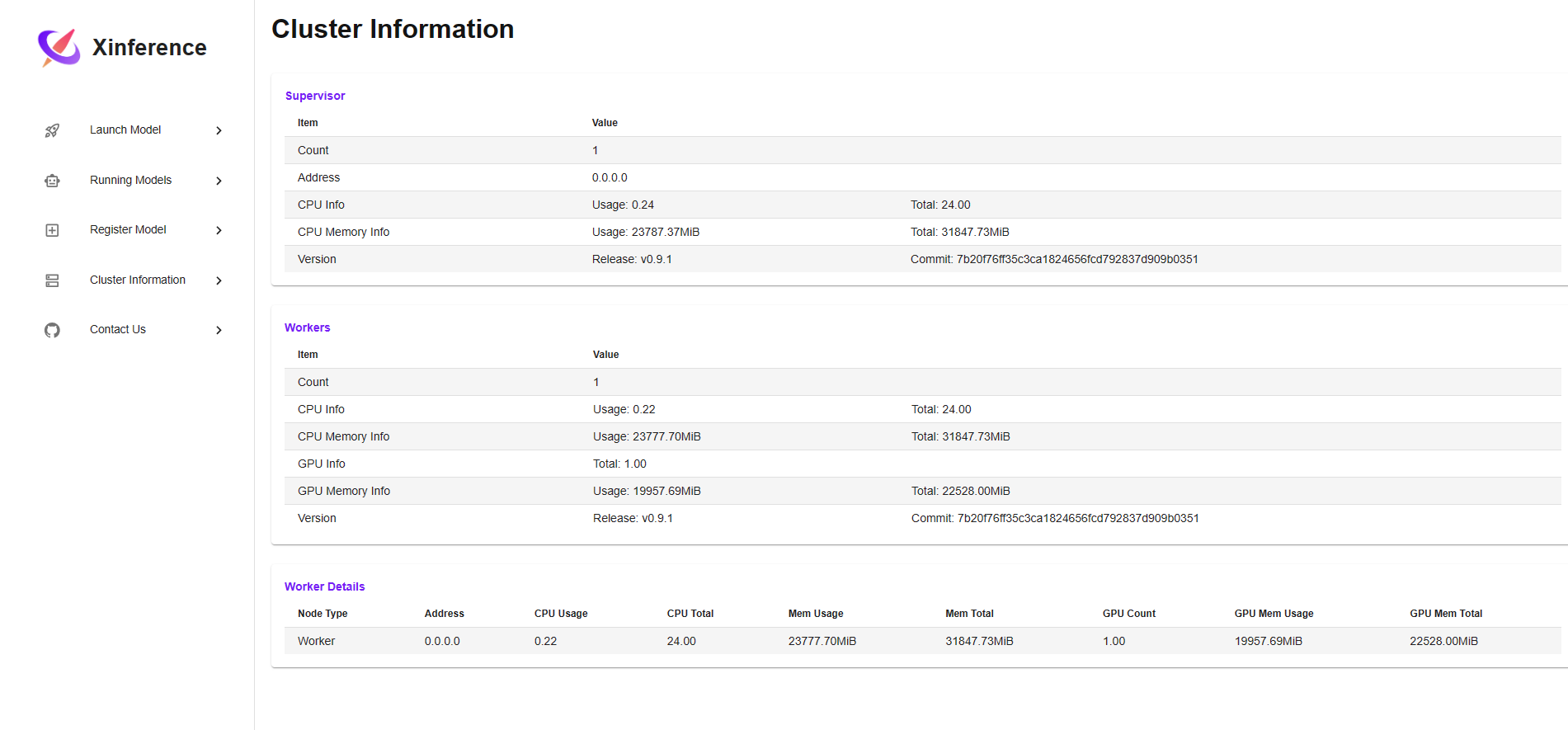

Hardware: RTX 2080 Ti 22G

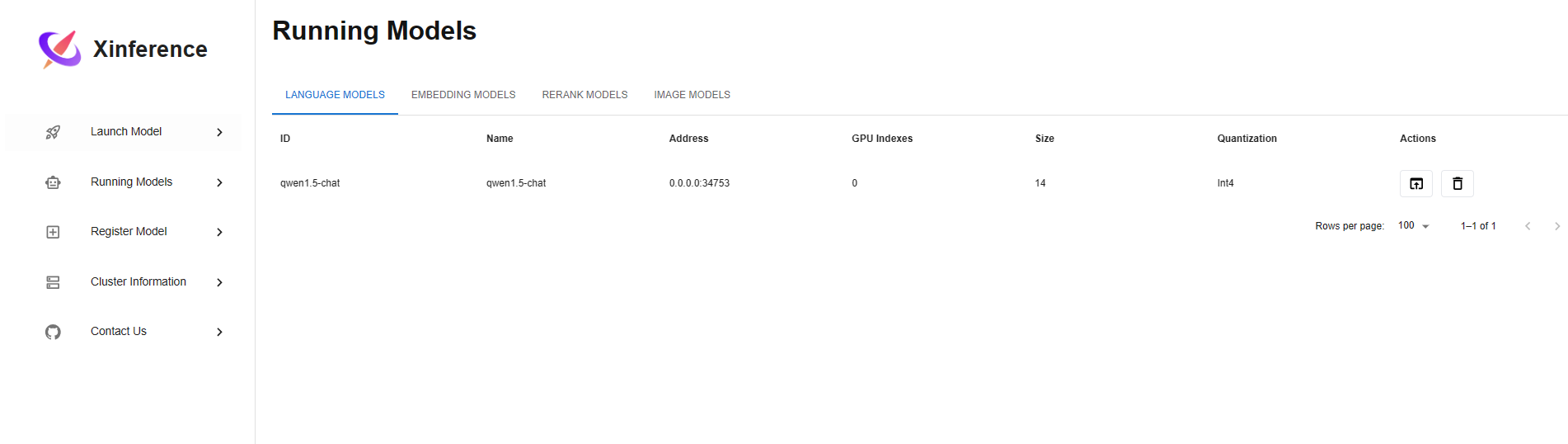

Models: qwen1.5-chat-13b-qint4 + bge-base-zh-v1.5

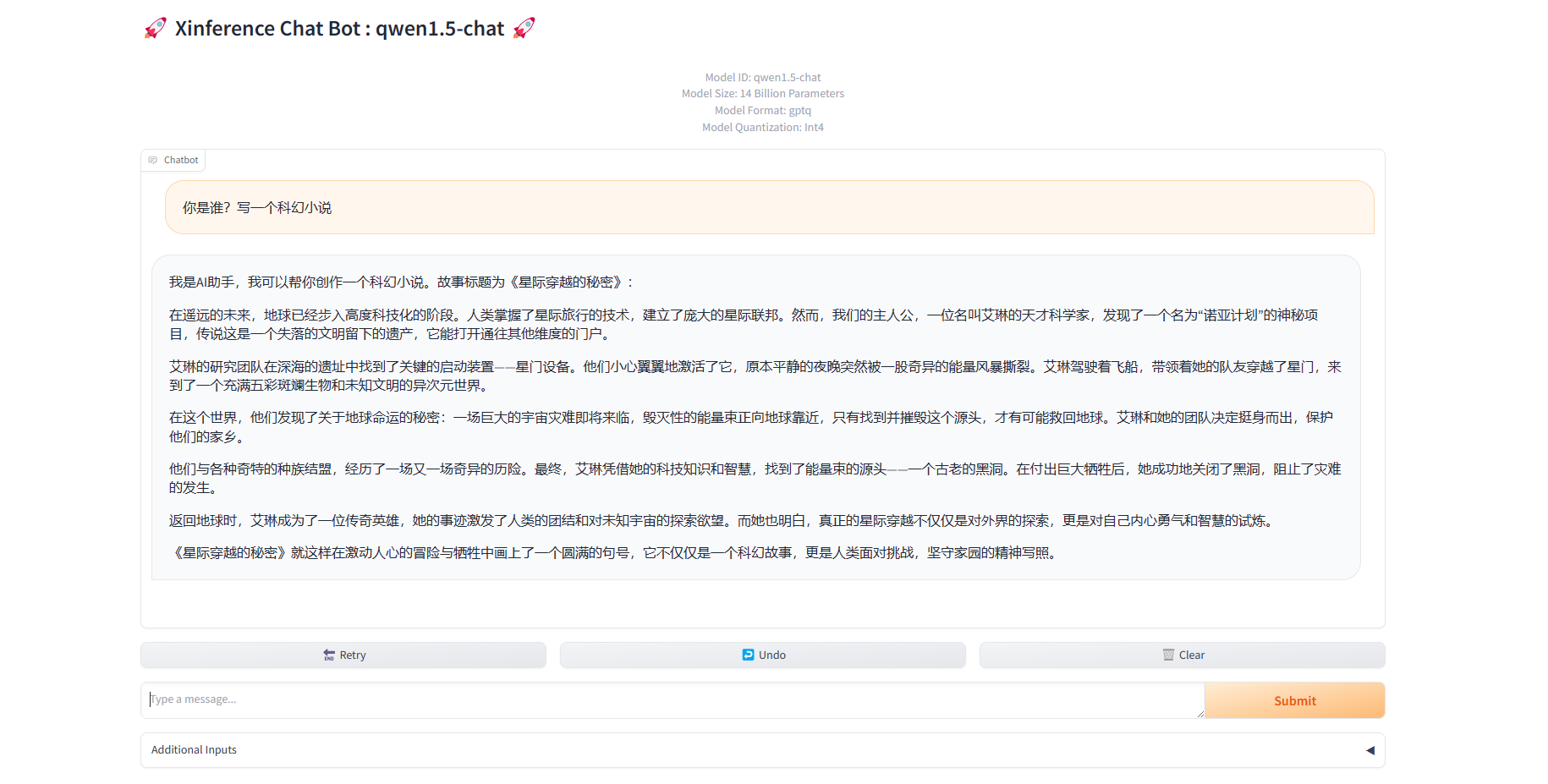

Desktop client: Chatbox

In the end, qwen was deployed successfully, and Chatbox was connected to the private model through the standard OpenAI API.
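Any other client can talk to the same endpoint that Chatbox uses. Below is a minimal sketch using only the Python standard library. It assumes the service is reachable at `http://localhost:9997/v1` (port 9997 is the one published in the compose file in this post) and that the model was launched under the UID `qwen1.5-chat`. That UID is a placeholder, not taken from this setup, so replace it with the UID shown when you launched the model.

```python
import json
import urllib.request

# Assumptions: the API is published on host port 9997,
# and MODEL_UID is a hypothetical placeholder for your own model UID.
BASE_URL = "http://localhost:9997/v1"
MODEL_UID = "qwen1.5-chat"


def build_chat_request(prompt, model=MODEL_UID, base_url=BASE_URL):
    """Build a POST request for the OpenAI-style /chat/completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt):
    """Send the prompt and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the container running and the model launched, `print(chat("Hello"))` should print the model's reply. In Chatbox the equivalent settings are the API host and the model name.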

The xinference docker-compose.yml configuration:

    version: '3.5'
    services:
      xinference:
        container_name: xinference
        hostname: xinference
        image: xprobe/xinference:v0.9.1
        privileged: true
        runtime: nvidia
        restart: "no"
        environment:
          - XINFERENCE_MODEL_SRC=modelscope
        volumes:
          - ./volumes/xinference/.xinference:/root/.xinference:rw
          - ./volumes/xinference/.cache/huggingface:/root/.cache/huggingface:rw
          - ./volumes/xinference/.cache/modelscope:/root/.cache/modelscope:rw
        command:
          - xinference-local
          - -H
          - 0.0.0.0
          - -p
          - '9997'
          - --log-level
          - debug
        ports:
          - "9997:9997"
          - "9931-9940:9931-9940"
        deploy:
          resources:
            reservations:
              devices:
                - driver: nvidia
                  count: all
                  capabilities: [gpu]
            limits:
              memory: 60480M
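Once the container is up, a quick way to confirm that the published port works is to query the OpenAI-style model listing endpoint. The sketch below assumes the service is reached from the docker host at `http://localhost:9997`. The exact shape of the returned JSON may differ between xinference versions, so it is returned as-is rather than parsed further.

```python
import json
import urllib.request

# Assumption: container port 9997 is published on the same host port.
BASE_URL = "http://localhost:9997"


def models_url(base_url=BASE_URL):
    """Return the URL of the OpenAI-style model listing endpoint."""
    return base_url.rstrip("/") + "/v1/models"


def list_models(base_url=BASE_URL):
    """Fetch and return the parsed JSON describing the running models."""
    with urllib.request.urlopen(models_url(base_url), timeout=10) as resp:
        return json.load(resp)
```

Calling `list_models()` before any model has been launched should still succeed; a connection error usually points at the container or the port mapping rather than at the model.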

Final result